Backport #27887 by @Zettat123

We should not use `asset.ID` in DownloadFunc because DownloadFunc is a

closure.

1bf5527eac/services/migrations/gitea_downloader.go (L284-L295)

A similar bug when migrating from GitHub has been fixed in #14703. This

PR fixes the bug when migrating from Gitea and GitLab.
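
The closure problem described above can be reproduced in a few lines. This is a minimal sketch, not the migration code itself: `Asset`, `buggyDownloaders`, `fixedDownloaders`, and `callAll` are hypothetical names. The loop variable is declared outside the loop on purpose so that the behavior is the same in every Go version (since Go 1.22, `for _, asset := range` creates a fresh variable per iteration).

```go
package main

import "fmt"

// Asset is a hypothetical stand-in for a release attachment.
type Asset struct {
	ID int64
}

// buggyDownloaders stores closures that read the shared variable `asset`
// when they are called, not when they are created.
func buggyDownloaders(assets []Asset) []func() int64 {
	var funcs []func() int64
	var asset Asset // a single variable shared by all iterations
	for _, asset = range assets {
		funcs = append(funcs, func() int64 { return asset.ID })
	}
	return funcs
}

// fixedDownloaders copies the ID into a per-iteration variable first.
func fixedDownloaders(assets []Asset) []func() int64 {
	var funcs []func() int64
	var asset Asset
	for _, asset = range assets {
		assetID := asset.ID // captured separately for each closure
		funcs = append(funcs, func() int64 { return assetID })
	}
	return funcs
}

// callAll invokes every closure and collects the IDs they report.
func callAll(funcs []func() int64) []int64 {
	ids := make([]int64, 0, len(funcs))
	for _, f := range funcs {
		ids = append(ids, f())
	}
	return ids
}

func main() {
	assets := []Asset{{ID: 1}, {ID: 2}, {ID: 3}}
	fmt.Println(callAll(buggyDownloaders(assets))) // [3 3 3]
	fmt.Println(callAll(fixedDownloaders(assets))) // [1 2 3]
}
```

Because the download functions run after the loop has finished, every buggy closure sees the last asset; copying the ID before building the closure avoids that.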

Co-authored-by: Zettat123 <zettat123@gmail.com>

Backport #27856

The only conflict is `ThemeName` in `500.tmpl`; it has been resolved

manually by keeping the old

`{{.SignedUser.Theme}}{{else}}{{DefaultTheme}}`

Backport #27787 by @KN4CK3R

Add missing `.Close()` calls. The current code does not delete the

temporary files if the data grows over 32 MB.
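
To illustrate why the `.Close()` call matters, here is a rough sketch of a buffer that spills to a temporary file once it exceeds a memory limit. `spillBuffer`, `TempPath`, and `exists` are hypothetical names for this example and are not the types used in the codebase; the point is only that the temporary file is removed in `Close`, so skipping the call leaves it on disk.

```go
package main

import (
	"bytes"
	"fmt"
	"os"
)

// spillBuffer is a hypothetical buffer that keeps data in memory until it
// would exceed maxMemory, then moves everything to a temporary file.
type spillBuffer struct {
	maxMemory int
	mem       bytes.Buffer
	file      *os.File
}

func (b *spillBuffer) Write(p []byte) (int, error) {
	if b.file == nil && b.mem.Len()+len(p) > b.maxMemory {
		f, err := os.CreateTemp("", "spill-*")
		if err != nil {
			return 0, err
		}
		b.file = f
		// Move what was buffered so far into the file.
		if _, err := f.Write(b.mem.Bytes()); err != nil {
			return 0, err
		}
		b.mem.Reset()
	}
	if b.file != nil {
		return b.file.Write(p)
	}
	return b.mem.Write(p)
}

// TempPath returns the path of the temporary file, or "" if none exists.
func (b *spillBuffer) TempPath() string {
	if b.file == nil {
		return ""
	}
	return b.file.Name()
}

// Close closes and deletes the temporary file, if one was created.
func (b *spillBuffer) Close() error {
	if b.file == nil {
		return nil
	}
	name := b.file.Name()
	closeErr := b.file.Close()
	b.file = nil
	removeErr := os.Remove(name)
	if closeErr != nil {
		return closeErr
	}
	return removeErr
}

// exists reports whether a file is present at path.
func exists(path string) bool {
	_, err := os.Stat(path)
	return err == nil
}

func main() {
	buf := &spillBuffer{maxMemory: 8}
	if _, err := buf.Write([]byte("more than eight bytes")); err != nil {
		fmt.Println("write failed:", err)
		return
	}
	path := buf.TempPath()
	fmt.Println("on disk before Close:", exists(path))
	if err := buf.Close(); err != nil {
		fmt.Println("close failed:", err)
	}
	fmt.Println("on disk after Close:", exists(path))
}
```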

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Backport #27266 by @merlleu

Hello there,

Cargo Index over HTTP is now preferred over git for package updates: we

should not force users who do not need the Git repo to have the repo

created/updated on each publish (it can still be created in the packages

settings).

The current behavior when publishing is to check if the repo exists and

create it on the fly if not, then update its content.

Cargo HTTP Index does not rely on the repo itself so this will be

useless for everyone not using the git protocol for cargo registry.

This PR only disables the on-the-fly creation of the repo when publishing

a crate.

This is linked to #26844 (error 500 when trying to publish a crate if

user is missing write access to the repo) because it's now optional.

Co-authored-by: merlleu <r.langdorph@gmail.com>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Backport #27655 by @wolfogre

When `webhook.PROXY_URL` has been set, the old code will check if the

proxy host is in `ALLOWED_HOST_LIST` or reject requests through the

proxy. It requires users to add the proxy host to `ALLOWED_HOST_LIST`.

However, it actually allows all requests to any port on the host, when

the proxy host is probably an internal address.

But things may be even worse. `ALLOWED_HOST_LIST` doesn't really work

when requests are sent to the allowed proxy, and the proxy could forward

them to any host.

This PR fixes it by:

- If the proxy has been set, always allow connections to the host and

port.

- Check `ALLOWED_HOST_LIST` before forwarding.
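
The two rules above might be sketched as follows. This is only an illustration under simplifying assumptions: `hostAllowed`, `allowDial`, and `allowRequest` are hypothetical helpers, and the allow list is reduced to exact host names, whereas the real `ALLOWED_HOST_LIST` supports richer patterns.

```go
package main

import (
	"fmt"
	"net"
)

// hostAllowed is a simplified, hypothetical stand-in for matching a host
// against ALLOWED_HOST_LIST (exact names only in this sketch).
func hostAllowed(allowList []string, host string) bool {
	for _, h := range allowList {
		if h == host {
			return true
		}
	}
	return false
}

// allowDial decides whether a TCP connection may be opened.
// proxyAddr is the "host:port" of the configured proxy, or "" without one.
func allowDial(proxyAddr, dialAddr string, allowList []string) bool {
	if proxyAddr != "" {
		// With a proxy, only the proxy's own host and port may be dialed.
		return dialAddr == proxyAddr
	}
	host, _, err := net.SplitHostPort(dialAddr)
	if err != nil {
		return false
	}
	return hostAllowed(allowList, host)
}

// allowRequest is checked before a request is handed to the proxy, since
// the proxy itself would forward it to any host.
func allowRequest(targetHost string, allowList []string) bool {
	return hostAllowed(allowList, targetHost)
}

func main() {
	allow := []string{"hooks.example.com"}
	proxy := "10.0.0.5:3128"
	fmt.Println(allowDial(proxy, "10.0.0.5:3128", allow))    // proxy port: allowed
	fmt.Println(allowDial(proxy, "10.0.0.5:22", allow))      // other port on proxy host: rejected
	fmt.Println(allowRequest("internal.example.com", allow)) // not in the list: rejected
}
```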

Co-authored-by: Jason Song <i@wolfogre.com>

Backport #27544 by @earl-warren

- Currently in the cron tasks, the 'Previous Time' only displays the

previous time of when the cron library executes the function, but not

any of the manual executions of the task.

- Store the last run's time in memory in the Task struct and use it

when it is later than the time at which the cron library last executed

this task.

- This ensures that if an instance admin manually starts a task, there's

feedback that the task is running or has run, because the task might finish

so quickly that the status icon has already changed to a

checkmark.

- Tasks that are executed at startup now reflect this as well, as the

time of the execution of that task on startup is now being shown as

'Previous Time'.

- Added integration tests for the API part, which is easier to test

because querying the HTML table of cron tasks is non-trivial.

- Resolves https://codeberg.org/forgejo/forgejo/issues/949
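
The "use the later of the two times" rule from the list above could look roughly like this. `Task` here is a trimmed-down, hypothetical struct, and `previousTime` and `at` are names invented for the sketch.

```go
package main

import (
	"fmt"
	"time"
)

// Task is a hypothetical, trimmed-down cron task.
type Task struct {
	LastRun time.Time // updated on every execution, manual or scheduled
}

// Run records the time of an execution.
func (t *Task) Run(now time.Time) {
	t.LastRun = now
}

// previousTime returns the later of the scheduler's recorded run and the
// task's own last run, so manual and startup runs are reflected too.
func previousTime(schedulerPrev time.Time, t *Task) time.Time {
	if t.LastRun.After(schedulerPrev) {
		return t.LastRun
	}
	return schedulerPrev
}

// at builds a fixed timestamp at the given hour, for the example only.
func at(hour int) time.Time {
	return time.Date(2023, time.October, 1, hour, 0, 0, 0, time.UTC)
}

func main() {
	task := &Task{}
	scheduled := at(3) // last run recorded by the cron library
	task.Run(at(5))    // a manual run two hours later
	fmt.Println(previousTime(scheduled, task).Hour()) // 5
}
```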

(cherry picked from commit fd34fdac14)

Co-authored-by: Earl Warren <109468362+earl-warren@users.noreply.github.com>

Co-authored-by: Gusted <postmaster@gusted.xyz>

Co-authored-by: KN4CK3R <admin@oldschoolhack.me>

Co-authored-by: silverwind <me@silverwind.io>

Backport #27486 by @lunny

Fix #27204

This PR allows `/<username>/<reponame>/attachments/<uuid>` access with

a personal access token and also changes the attachments API download URL to

it so that attachments can be downloaded correctly.

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

Backport #27400 by @fantognazza

With this PR we added the possibility to configure the Actions timeout

values for killing tasks/jobs.

In particular, this enhancement is closely related to the `act_runner`

configuration reported below:

```

# The timeout for a job to be finished.

# Please note that the Gitea instance also has a timeout (3h by default) for the job.

# So the job could be stopped by the Gitea instance if it's timeout is shorter than this.

timeout: 3h

```

---

By setting the corresponding key in the INI configuration file, it is

possible to let jobs run for more than 3 hours.

Signed-off-by: Francesco Antognazza <francesco.antognazza@gmail.com>

Backport #27265 by @JakobDev

Part of #27065

This PR touches functions used in templates. As templates are not statically

typed, errors are harder to find, but I hope I caught them all. I think

some testing by other people would not hurt.

Co-authored-by: JakobDev <jakobdev@gmx.de>

Backport #26521 by @JakobDev

Blank Issues should be enabled if they are not explicitly disabled through

the `blank_issues_enabled` field of the Issue Config. The implementation

currently has a bug: if you create an Issue Config file with only

`contact_links` and without a `blank_issues_enabled` field,

`blank_issues_enabled` is set to false by default.
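
One common way to get "enabled unless explicitly disabled" is to set the default before decoding, so that an absent field leaves it untouched. The sketch below shows that idea only; it uses `encoding/json` as a stand-in for the YAML parsing to stay within the standard library, and `IssueConfig`, `ContactLink`, and `parseIssueConfig` are hypothetical names rather than the actual fix.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// ContactLink and IssueConfig are hypothetical, simplified types.
type ContactLink struct {
	Name string `json:"name"`
	URL  string `json:"url"`
}

type IssueConfig struct {
	BlankIssuesEnabled bool          `json:"blank_issues_enabled"`
	ContactLinks       []ContactLink `json:"contact_links"`
}

// parseIssueConfig starts from the default (blank issues enabled) and lets
// the decoder overwrite only the fields that are present in the input.
func parseIssueConfig(data []byte) (IssueConfig, error) {
	cfg := IssueConfig{BlankIssuesEnabled: true}
	err := json.Unmarshal(data, &cfg)
	return cfg, err
}

func main() {
	onlyLinks := []byte(`{"contact_links":[{"name":"Chat","url":"https://example.com"}]}`)
	cfg, err := parseIssueConfig(onlyLinks)
	if err != nil {
		fmt.Println("parse failed:", err)
		return
	}
	fmt.Println(cfg.BlankIssuesEnabled) // true
}
```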

The fix is only one line, but I decided to also improve the tests to

make sure there are no other problems with the Implementation.

This is a bugfix, so it should be backported to 1.20.

Co-authored-by: JakobDev <jakobdev@gmx.de>

Backport #27182 by @jolheiser

Resolves #27180

`URL` points to the API URL, `HTMLURL` points to the web page.

Notably, however, for PRs they are the same URL. I switched them to use

HTMLURL to match the rest of the codebase terminology.

Co-authored-by: John Olheiser <john.olheiser@gmail.com>

This PR adds a new field `RemoteAddress` to both mirror types which

contains the sanitized remote address for easier (database) access to

that information. Will be used in the audit PR if merged.

Part of #27065

This reduces the usage of `db.DefaultContext`. I think I've got enough

files for the first PR. When this is merged, I will continue working on

this.

Considering how many files this PR affects, I hope it won't take too long

to merge, so I don't end up in merge conflict hell.

---------

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #26723

Add `ChangeDefaultBranch` to the `notifier` interface and implement it

in `indexerNotifier`. So when changing the default branch,

`indexerNotifier` sends a message to the `indexer queue` to update the

index.
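
The shape of this change can be sketched with a small interface and a channel standing in for the indexer queue. The signatures below are assumptions made for illustration (the real `notifier` interface takes richer arguments), and the queue is reduced to a channel of repository IDs.

```go
package main

import "fmt"

// Notifier is a hypothetical, reduced version of the notifier interface.
type Notifier interface {
	ChangeDefaultBranch(repoID int64, branch string)
}

// indexerNotifier forwards events to the indexer queue, modeled here as a
// channel of repository IDs.
type indexerNotifier struct {
	queue chan int64
}

// ChangeDefaultBranch asks the indexer to re-index the repository, because
// the index is built from the default branch.
func (n *indexerNotifier) ChangeDefaultBranch(repoID int64, _ string) {
	n.queue <- repoID
}

func main() {
	n := &indexerNotifier{queue: make(chan int64, 1)}
	var notifier Notifier = n
	notifier.ChangeDefaultBranch(42, "main")
	fmt.Println("queued for re-indexing:", <-n.queue)
}
```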

---------

Co-authored-by: techknowlogick <matti@mdranta.net>

Currently, Artifact does not have an expiration and automatic cleanup

mechanism, and this feature needs to be added. It contains the following

key points:

- [x] add global artifact retention days option in config file. Default

value is 90 days.

- [x] add cron task to clean up expired artifacts. It should run once a

day.

- [x] support custom retention period from `retention-days: 5` in

`upload-artifact@v3`.

- [x] artifacts link in actions view should be non-clickable text when

expired.
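
The expiry rule in the checklist above can be written down in a few lines. This is a sketch with assumed names (`expiresAt`, `isExpired`) and Unix-second timestamps; falling back to the global default when no valid custom value is given is an assumption of the example.

```go
package main

import "fmt"

const (
	defaultRetentionDays = 90 // global default from the config file
	secondsPerDay        = 24 * 60 * 60
)

// expiresAt returns the Unix time at which an artifact expires. A
// non-positive retentionDays falls back to the global default.
func expiresAt(createdUnix int64, retentionDays int) int64 {
	if retentionDays <= 0 {
		retentionDays = defaultRetentionDays
	}
	return createdUnix + int64(retentionDays)*secondsPerDay
}

// isExpired reports whether the cleanup task should remove the artifact.
func isExpired(expiresUnix, nowUnix int64) bool {
	return nowUnix > expiresUnix
}

func main() {
	created := int64(1700000000)
	custom := expiresAt(created, 5) // e.g. `retention-days: 5`
	sixDaysLater := created + 6*secondsPerDay
	fmt.Println(isExpired(custom, sixDaysLater))                // true
	fmt.Println(isExpired(expiresAt(created, 0), sixDaysLater)) // false
}
```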

Just like `models/unittest`, the testing helper functions should be in a

separate package: `contexttest`

And complete the TODO:

> // TODO: move this function to other packages, because it depends on "models" package

Cargo registry-auth feature requires config.json to have a property

auth-required set to true in order to send token to all registry

requests.

This is ok for git index because you can manually edit the config.json

file to add the auth-required, but when using sparse

(setting index url to

"sparse+https://git.example.com/api/packages/{owner}/cargo/"), the

config.json is dynamically rendered, and does not reflect changes to the

config.json file in the repo.

I see two approaches:

- Serve the real config.json file when fetching the config.json on the

cargo service.

- Automatically detect if the registry requires authorization. (This is

what I implemented in this PR).

What the PR does:

- When a cargo index repository is created, set auth-required in its config.json

to whether or not the repository is private.

- When the cargo/config.json endpoint is called, set auth-required to

whether or not the request was authorized using an API token.
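
As a rough sketch of the dynamically rendered file, the function below builds a config.json whose `auth-required` value is passed in by the caller. The `dl`, `api`, and `auth-required` keys are the standard Cargo index config fields; the `cargoConfig` type, the `renderConfig` helper, and the exact URL layout are assumptions for this example.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// cargoConfig mirrors the keys of a Cargo index config.json.
type cargoConfig struct {
	DownloadURL  string `json:"dl"`
	APIURL       string `json:"api"`
	AuthRequired bool   `json:"auth-required"`
}

// renderConfig builds config.json for a registry root. authRequired would
// be the repository's privacy when writing the git index, or whether the
// request carried an API token when serving the sparse endpoint.
func renderConfig(registryRoot string, authRequired bool) ([]byte, error) {
	return json.Marshal(cargoConfig{
		DownloadURL:  registryRoot + "/api/v1/crates", // assumed layout
		APIURL:       registryRoot,
		AuthRequired: authRequired,
	})
}

func main() {
	out, err := renderConfig("https://git.example.com/api/packages/acme/cargo", true)
	if err != nil {
		fmt.Println("render failed:", err)
		return
	}
	fmt.Println(string(out))
}
```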

The web context (modules/context.Context) is quite complex, and it is

difficult for callers to initialize it correctly.

This PR introduces a `NewWebContext` function to make sure the web

context has the same behavior in different cases.

- Resolves https://codeberg.org/forgejo/forgejo/issues/580

- Return an `upload_field` in every release API response, which points to

the API URL for uploading new assets.

- Adds unit test.

- Adds integration testing to verify URL is returned correctly and that

upload endpoint actually works

---------

Co-authored-by: Gusted <postmaster@gusted.xyz>

Replace #22751

1. only support the default branch in the repository setting.

2. autoload schedule data from the schedule table after starting the

service.

3. support specific syntax like `@yearly`, `@monthly`, `@weekly`,

`@daily`, `@hourly`
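
For readers unfamiliar with the shorthand descriptors in item 3, they conventionally correspond to the five-field cron expressions below. The `normalizeSchedule` helper is a hypothetical illustration of that mapping, not the parser used by this PR.

```go
package main

import "fmt"

// descriptors maps the conventional shorthand to five-field cron specs
// (minute, hour, day of month, month, day of week).
var descriptors = map[string]string{
	"@yearly":  "0 0 1 1 *",
	"@monthly": "0 0 1 * *",
	"@weekly":  "0 0 * * 0",
	"@daily":   "0 0 * * *",
	"@hourly":  "0 * * * *",
}

// normalizeSchedule expands a known descriptor and returns any other
// spec unchanged.
func normalizeSchedule(spec string) string {
	if expanded, ok := descriptors[spec]; ok {
		return expanded
	}
	return spec
}

func main() {
	fmt.Println(normalizeSchedule("@daily"))       // 0 0 * * *
	fmt.Println(normalizeSchedule("30 5 * * 1,3")) // 30 5 * * 1,3
}
```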

## How to use

See the [GitHub Actions

document](https://docs.github.com/en/actions/using-workflows/events-that-trigger-workflows#schedule)

for getting more detailed information.

```yaml
on:
  schedule:
    - cron: '30 5 * * 1,3'
    - cron: '30 5 * * 2,4'

jobs:
  test_schedule:
    runs-on: ubuntu-latest
    steps:
      - name: Not on Monday or Wednesday
        if: github.event.schedule != '30 5 * * 1,3'
        run: echo "This step will be skipped on Monday and Wednesday"
      - name: Every time
        run: echo "This step will always run"
```

Signed-off-by: Bo-Yi.Wu <appleboy.tw@gmail.com>

---------

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: techknowlogick <techknowlogick@gitea.io>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Co-authored-by: Lunny Xiao <xiaolunwen@gmail.com>

- Add a new `CreateSecretOption` struct for creating secrets

- Implement a `CreateOrgSecret` function to create a secret in an

organization

- Add a new route in `api.go` to handle the creation of organization

secrets

- Update the Swagger template to include the new `CreateOrgSecret` API

endpoint

---------

Signed-off-by: appleboy <appleboy.tw@gmail.com>

## Archived labels

This adds the structure to allow for archived labels.

Archived labels are, just like closed milestones or projects, a medium to hide information without deleting it.

It is especially useful if there are outdated labels that should no longer be used without deleting the label entirely.

## Changes

1. UI and API have been equipped with the support to mark a label as archived

2. The time when a label has been archived will be stored in the DB

## Outsourced for the future

There's no special handling for archived labels at the moment.

This will be done in the future.

Part of https://github.com/go-gitea/gitea/issues/25237

---------

Co-authored-by: delvh <dev.lh@web.de>

Co-authored-by: wxiaoguang <wxiaoguang@gmail.com>

Fix #26129

Replace #26258

This PR introduces a transaction around pull request creation so that if

any step fails, everything is rolled back and no dirty

pull request is left behind.

---------

Co-authored-by: Giteabot <teabot@gitea.io>

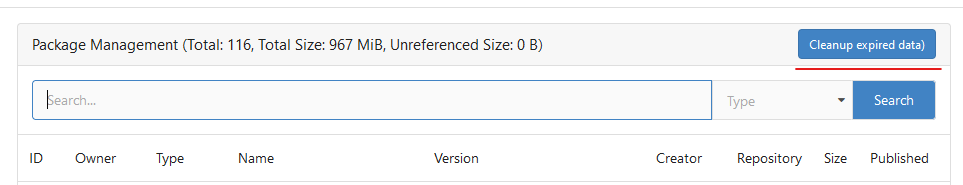

Until now expired package data gets deleted daily by a cronjob. The

admin page shows the size of all packages and the size of unreferenced

data. The users (#25035, #20631) expect the deletion of this data if

they run the cronjob from the admin page but the job only deletes data

older than 24h.

This PR adds a new button which deletes all expired data.

---------

Co-authored-by: silverwind <me@silverwind.io>

Follow #25229

Copied from

https://github.com/go-gitea/gitea/pull/26290#issuecomment-1663135186

The bug is that we cannot get changed files for the

`pull_request_target` event. This event runs in the context of the base

branch, so we won't get any changes if we call

`GetFilesChangedSinceCommit` with `PullRequest.Base.Ref`.

I noticed that `issue_service.CreateComment` adds transaction operations

on `issues_model.CreateComment`. We can merge the two functions and

avoid calling each other's methods in the `services` layer.

Co-authored-by: Giteabot <teabot@gitea.io>

- The user renaming function has zero test coverage.

- This patch brings that up to speed by testing various scenarios and

ensuring that in a normal workflow the correct things have changed to their

respective new values. Most scenarios are to ensure certain things DO NOT

happen.

(cherry picked from commit 5b9d34ed11)

Refs: https://codeberg.org/forgejo/forgejo/pulls/1156

Co-authored-by: Gusted <postmaster@gusted.xyz>

- Just to get 100% coverage on services/wiki/wiki_path.go, nothing

special. This is just a formality.

(cherry picked from commit 6b3528920f)

Refs: https://codeberg.org/forgejo/forgejo/pulls/1156

Co-authored-by: Gusted <postmaster@gusted.xyz>

In the original implementation, we can only get the first 30 records of

the commit status (the default paging size). If there are

more than 30 commit statuses, this leads to bug #25990. I made the following two

changes.

- On the page, use the `db.ListOptions{ListAll: true}` parameter

instead of `db.ListOptions{}`

- The `GetLatestCommitStatus` function makes a determination as to

whether or not a pager is being used.
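
The difference between the two option values can be shown with a simplified pager. `ListOptions` below is a hypothetical reduction of `db.ListOptions`, and `paginate` applies it to an in-memory slice instead of a database query; the default page size of 30 is taken from the description above.

```go
package main

import "fmt"

// ListOptions is a hypothetical, reduced version of db.ListOptions.
type ListOptions struct {
	Page     int
	PageSize int
	ListAll  bool
}

const defaultPageSize = 30

// paginate returns every item when ListAll is set and one page otherwise.
func paginate(items []string, opts ListOptions) []string {
	if opts.ListAll {
		return items
	}
	size := opts.PageSize
	if size <= 0 {
		size = defaultPageSize
	}
	page := opts.Page
	if page <= 0 {
		page = 1
	}
	start := (page - 1) * size
	if start >= len(items) {
		return nil
	}
	end := start + size
	if end > len(items) {
		end = len(items)
	}
	return items[start:end]
}

func main() {
	statuses := make([]string, 45) // 45 commit statuses
	fmt.Println(len(paginate(statuses, ListOptions{})))              // 30
	fmt.Println(len(paginate(statuses, ListOptions{ListAll: true}))) // 45
}
```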

Fixed #25990

The API should only return the real email address of a user if the caller is

logged in. The check that does this doesn't work; this PR fixes it. This is not

really a security issue, but it can lead to spam.

---------

Co-authored-by: silverwind <me@silverwind.io>

Attempt to fix: #25744

This fixes the log level of the "hook not found by ID" error that appears

when a repo is deleted. It should be logged at warn level to reduce noise in the logs.

---------

Co-authored-by: delvh <dev.lh@web.de>

- The `NoBetterThan` function can only handle comparisons between

"pending," "success," "error," and "failure." For any other comparison,

we directly return false. This prevents logic errors like the one in

#26121.

- The callers of the `NoBetterThan` function should also avoid making

incomparable calls.
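
A comparison that refuses unknown states might look like the sketch below. The `State` type and the priority order (error, failure, pending, success, from worst to best) are assumptions made for the example; only the "return false for anything incomparable" rule comes from the description above.

```go
package main

import "fmt"

// State is a hypothetical commit status state.
type State string

// priorities ranks the four comparable states from worst to best.
// The exact order is an assumption of this sketch.
var priorities = map[State]int{
	"error":   0,
	"failure": 1,
	"pending": 2,
	"success": 3,
}

// NoBetterThan reports whether s is no better than other. If either state
// is not one of the four comparable ones, it returns false.
func (s State) NoBetterThan(other State) bool {
	a, okA := priorities[s]
	b, okB := priorities[other]
	if !okA || !okB {
		return false
	}
	return a <= b
}

func main() {
	fmt.Println(State("failure").NoBetterThan("success")) // true
	fmt.Println(State("success").NoBetterThan("pending")) // false
	fmt.Println(State("skipped").NoBetterThan("success")) // false: not comparable
}
```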

---------

Co-authored-by: yp05327 <576951401@qq.com>

Co-authored-by: puni9869 <80308335+puni9869@users.noreply.github.com>

This PR

- Fix #26093. Replace `time.Time` with `timeutil.TimeStamp`

- Fix #26135. Add missing `xorm:"extends"` to `CountLFSMetaObject` for

LFS meta object query

- Add a unit test for LFS meta object garbage collection

- Cancel running jobs if the event is push

- Add a new function `CancelRunningJobs` to cancel all running jobs of a

run

- Update `FindRunOptions` struct to include `Ref` field and update its

condition in `toConds` function

- Implement auto cancellation of running jobs in the same workflow in

`notify` function

related task: https://github.com/go-gitea/gitea/pull/22751/

---------

Signed-off-by: Bo-Yi Wu <appleboy.tw@gmail.com>

Signed-off-by: appleboy <appleboy.tw@gmail.com>

Co-authored-by: Jason Song <i@wolfogre.com>

Co-authored-by: delvh <dev.lh@web.de>